How do transparent AI scoring systems increase recruiter trust and effectiveness?

77.9% of HR professionals report moderate to significant cost savings from AI integration in hiring, yet recruiter confidence remains fragmented. The difference between organizations seeing transformative results and those struggling with adoption often comes down to one critical factor: transparency. While the best resume screening software promises to revolutionize talent acquisition through faster screening and bias reduction, the reality is that most recruiters still work with "black box" systems that provide recommendations without explaining their reasoning.

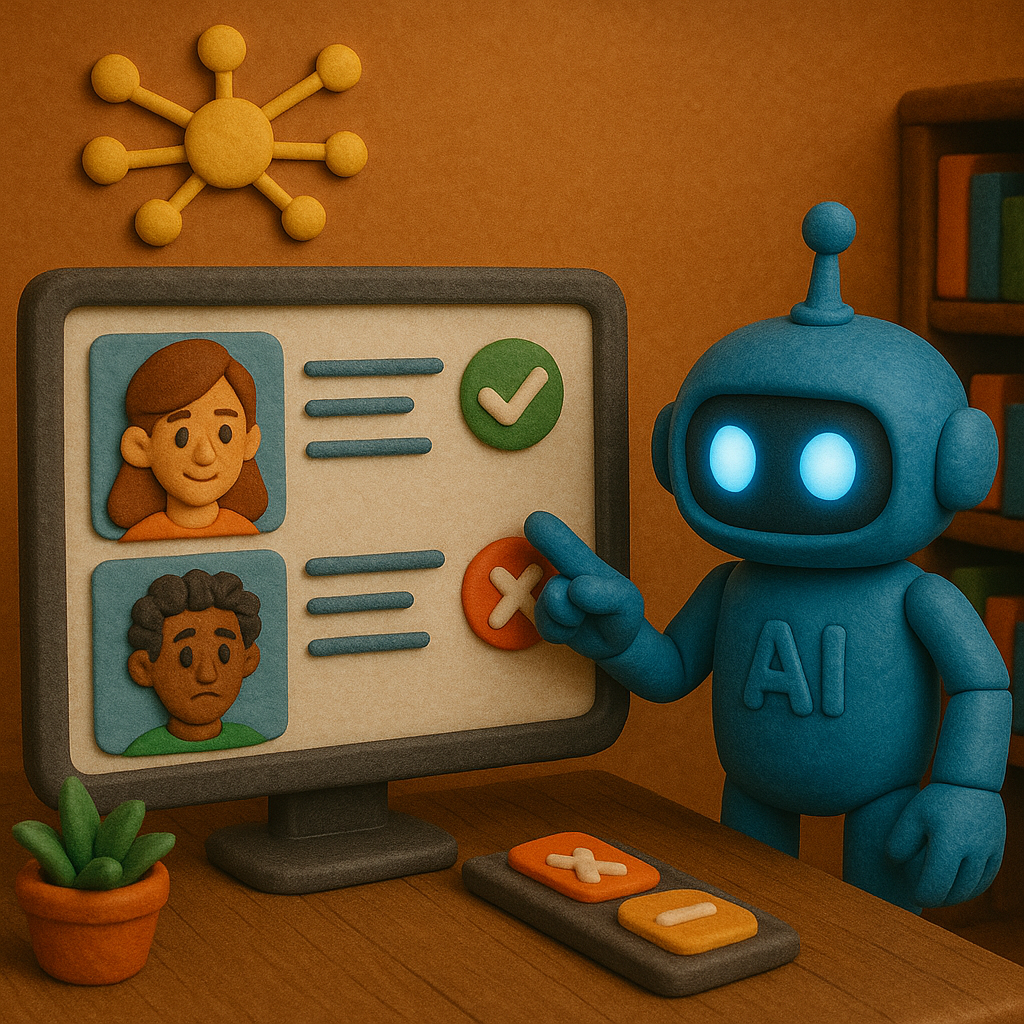

The solution lies in transparent AI scoring systems that bridge the gap between algorithmic power and human understanding. Modern resume screening software equipped with explainable AI doesn't just deliver better candidates—it builds recruiter confidence through explainable decisions, measurable outcomes, and clear accountability. When recruiters understand how their resume screening tool reaches its conclusions, they can leverage technology as a trusted partner rather than fighting an opaque algorithm.

This comprehensive guide explores how transparency transforms AI from a recruitment obstacle into a competitive advantage, covering the specific mechanisms that build trust, the measurable benefits organizations achieve, and the practical steps for implementation.

What makes current AI recruiting tools feel like 'black boxes' to recruiters?

The fundamental challenge facing modern recruitment isn't the lack of AI tools—it's the opacity that defines most of them. "Many companies don't want to reveal what technology they are using, and vendors don't want to reveal what's in the black box, despite evidence that some automated decision making systems make biased or arbitrary decisions," according to researchers from NYU Tandon. This secrecy creates a perfect storm of uncertainty that undermines recruiter confidence at every level.

How do opaque algorithms create uncertainty in hiring decisions?

Black box AI systems process vast amounts of data through complex neural networks, making decisions that even their creators cannot fully explain. Users don't know how a black box model makes the decisions that it does—the factors it weighs and the correlations it draws [1]. For recruiters using traditional resume screening software, this means receiving candidate rankings or rejection recommendations without understanding the underlying logic.

Consider a typical scenario: a resume screening tool ranks a highly qualified candidate low in the selection process. The recruiter sees the candidate's impressive credentials but cannot understand why the algorithm disagrees. "Recruiters don't even know why certain candidates are on page one of the ranking, or why certain people are on page ten of the ranking when they search for candidates," explains researcher Mona Sloane [2]. This uncertainty forces recruiters into an impossible position—trust the algorithm despite their professional judgment, or override the system and question its value.

The complexity of these systems compounds the problem. The number of mathematical operations and weights these algorithms apply to data to gain predictive utility is so enormously complex, and in many cases unintuitive, that even experts have a hard—if not impossible—time figuring out how and why the systems output what they output. When expert data scientists struggle to explain AI decisions, how can recruiters be expected to trust and effectively use these tools?

What specific information do recruiters need to trust AI recommendations?

Recruiters require three categories of information to build confidence in AI systems: decision factors, weighting logic, and confidence indicators. Research on human-AI collaboration reveals that recruiters need to understand which candidate attributes the system prioritizes and why. In that research, recruiters and managers emphasized knowing why an AI system selects or rejects an applicant, because the explanation informs their own judgment and improves the chances of using the AI tool successfully.

Effective transparency extends beyond simply listing factors to include context about their relative importance. For instance, if an AI system heavily weights programming languages for a technical role, recruiters need to understand not just that this factor matters, but how much it matters relative to experience level, educational background, or project portfolio quality. This granular insight enables recruiters to contextualize AI recommendations within their broader candidate evaluation framework.

Additionally, recruiters benefit from understanding the system's confidence level in its assessments. Recruiters and managers indicated that the ranking of candidate scores provided by the AI system should not be treated as a final decision, which gives people a greater sense of control over the AI hiring process. When an AI system indicates lower confidence in a particular match, recruiters can invest additional time in manual review, combining algorithmic efficiency with human judgment where it matters most.

How does scoring opacity impact candidate relationships and employer brand?

The hidden nature of AI decision-making creates ripple effects that extend far beyond internal recruiter frustration. Applicants could perceive the decisions as arbitrary or nonsensical, resulting in complaints of unfairness, feelings of frustration, or disengagement. When candidates receive rejections without explanation or understanding, it damages their perception of the organization's fairness and professionalism.

Modern candidates increasingly expect transparency in hiring processes. 85% of Americans are concerned about using AI for hiring decisions, so organizations can hurt their ability to hire by not being more forthcoming. This concern becomes particularly acute when candidates suspect they've been evaluated by an automated system without their knowledge or consent. The lack of transparency can trigger legal challenges and regulatory scrutiny, as evidenced by the increasing number of state laws requiring disclosure of AI use in hiring.

From an employer branding perspective, opacity suggests an organization that either doesn't understand its own tools or doesn't respect candidates enough to provide clear communication. Candidates may be less likely to trust the organization and less interested in pursuing job opportunities there when they perceive the hiring process as unfair or opaque. In contrast, organizations that proactively explain their use of AI and provide candidates with insights into the evaluation process can differentiate themselves as transparent, candidate-centric employers.

What transparency features actually build recruiter confidence?

Moving beyond the problems of opacity, specific transparency features have proven effective in building recruiter trust and improving AI adoption. These features transform AI from a mysterious algorithm into an understandable tool that augments human decision-making capabilities.

How do factor-level explanations help recruiters understand candidate scores?

Factor-level explanations provide recruiters with detailed breakdowns of how individual candidate attributes contribute to overall scores. Rather than presenting a single numerical ranking, transparent systems show recruiters that a candidate scored high on technical skills (weighted at 40%), moderately on experience level (weighted at 30%), and low on cultural fit indicators (weighted at 30%). This granular visibility enables recruiters to understand not just who the AI recommends, but precisely why.
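To make the idea concrete, here is a minimal sketch of a factor-level breakdown. It assumes a simple weighted sum over factor scores on a 0-100 scale; the factor names, the scale, and the `explain_score` helper are hypothetical and chosen only to mirror the 40/30/30 example above. Real screening products may combine factors in very different ways.

```python
from dataclasses import dataclass

# Hypothetical weights mirroring the 40/30/30 split in the example above.
WEIGHTS = {"technical_skills": 0.40, "experience": 0.30, "cultural_fit": 0.30}


@dataclass
class FactorContribution:
    factor: str
    score: float         # factor score on an assumed 0-100 scale
    weight: float        # share of the overall score
    contribution: float  # score * weight, in overall-score points


def explain_score(factor_scores):
    """Return the overall score plus a per-factor breakdown, largest first."""
    missing = set(WEIGHTS) - set(factor_scores)
    if missing:
        raise ValueError(f"missing factor scores: {sorted(missing)}")
    rows = [
        FactorContribution(name, factor_scores[name], weight, factor_scores[name] * weight)
        for name, weight in WEIGHTS.items()
    ]
    rows.sort(key=lambda row: row.contribution, reverse=True)
    return sum(row.contribution for row in rows), rows


# Illustrative candidate: strong technical skills, moderate experience, low cultural fit.
total, breakdown = explain_score(
    {"technical_skills": 90, "experience": 60, "cultural_fit": 30}
)
print(f"overall score: {total:.1f}")
for row in breakdown:
    print(f"{row.factor:<17} score={row.score:>3} weight={row.weight:.0%} "
          f"adds {row.contribution:.1f} points")
```

Showing the contribution column, not just the final number, is what lets a recruiter see that most of this candidate's score comes from technical skills.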

Research on explainable AI demonstrates that transparent AI models with clear explanations of their decision-making processes help users gain confidence in the system's capabilities and accuracy. When recruiters can see that an AI system correctly identifies key qualifications and weighs them appropriately, they develop trust in the algorithm's judgment. Conversely, when explanations reveal that the system overemphasizes irrelevant factors or misses critical qualifications, recruiters can adjust their reliance on the recommendations accordingly.

Advanced factor-level explanations also provide comparative context, showing how a candidate's scores relate to successful hires in similar roles. For example, a system might indicate that while a candidate's experience level is below average for the role, their technical assessment scores fall in the top 10% of successful hires. This comparative insight helps recruiters understand whether particular weaknesses are deal-breakers or acceptable trade-offs given the candidate's other strengths.
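One simple way to produce this kind of comparative context is a percentile rank against past successful hires. The sketch below is an assumption about how such a comparison could be computed, not a description of any particular product; the `percentile_rank` helper and the sample scores are hypothetical.

```python
from bisect import bisect_right


def percentile_rank(value, reference_values):
    """Percent of reference values that are less than or equal to `value`."""
    if not reference_values:
        raise ValueError("reference_values must not be empty")
    ordered = sorted(reference_values)
    return 100.0 * bisect_right(ordered, value) / len(ordered)


# Hypothetical technical-assessment scores of past successful hires in the role.
successful_hire_scores = [60, 65, 70, 72, 75, 78, 80, 85, 88, 95]

rank = percentile_rank(92, successful_hire_scores)
print(f"candidate is at or above {rank:.0f}% of successful hires")
```

With these sample numbers, a candidate scoring 92 sits at or above nine of the ten past hires, which corresponds to the "top 10%" framing in the example above.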

What role does confidence scoring play in recruiter decision-making?

Confidence scoring addresses one of the most critical challenges in AI-assisted hiring: knowing when to trust algorithmic recommendations and when to rely more heavily on human judgment. The impact of transparency and explainability on trust in AI shows mixed results, but confidence indicators help recruiters calibrate their trust appropriately.

When an AI system expresses high confidence in a candidate match (e.g., 95% confidence based on strong alignment across all weighted factors), recruiters can move more quickly through the evaluation process. Conversely, low confidence scores (e.g., 60% confidence due to limited data or conflicting signals) alert recruiters to invest additional time in manual review or request supplementary information before making decisions.
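The routing logic described here can be sketched in a few lines. The thresholds (0.90 and 0.70) and the queue names are assumptions picked for illustration; an organization would set its own cut-offs based on how well the system's confidence tracks real outcomes.

```python
def route_candidate(confidence, fast_track_at=0.90, manual_review_below=0.70):
    """Map a match confidence in [0, 1] to a review queue.

    Thresholds are illustrative defaults, not recommended values.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= fast_track_at:
        return "fast_track"       # strong alignment: quick recruiter confirmation
    if confidence < manual_review_below:
        return "manual_review"    # limited data or conflicting signals
    return "standard_review"


for confidence in (0.95, 0.80, 0.60):
    print(f"{confidence:.0%} confidence -> {route_candidate(confidence)}")
```

Under these assumed thresholds, the 95% case from the text goes to the fast track and the 60% case goes to manual review.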

This calibrated approach improves both efficiency and accuracy. High-confidence recommendations can be processed more quickly, while low-confidence cases receive appropriate human attention. Stronger AI support tends to reduce recruiters' cognitive workload in scenarios where the algorithm demonstrates clear expertise, freeing up mental resources for complex cases that require human insight.

The key is that confidence scoring must be accurate and well-calibrated. When an AI system consistently demonstrates appropriate confidence levels—high confidence correlating with correct recommendations and low confidence identifying genuinely uncertain cases—recruiters learn to trust the system's self-assessment capabilities.
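Whether confidence is well calibrated can be checked from historical data. The sketch below bins past recommendations by stated confidence and compares each bin's average confidence with how often the recommendation turned out to be correct. The record format, the bin count, and the definition of "correct" (for example, agreement with the final hiring outcome) are all assumptions for illustration.

```python
def calibration_table(records, n_bins=5):
    """Group (confidence, was_correct) pairs into equal-width confidence bins.

    Returns one (bin_low, bin_high, count, mean_confidence, accuracy) tuple
    per non-empty bin.
    """
    bins = [[] for _ in range(n_bins)]
    for confidence, was_correct in records:
        index = min(int(confidence * n_bins), n_bins - 1)  # 1.0 falls in the top bin
        bins[index].append((confidence, was_correct))
    table = []
    for index, items in enumerate(bins):
        if not items:
            continue
        mean_confidence = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        table.append((index / n_bins, (index + 1) / n_bins,
                      len(items), mean_confidence, accuracy))
    return table


def expected_calibration_error(records, n_bins=5):
    """Gap between stated confidence and observed accuracy, weighted by bin size."""
    table = calibration_table(records, n_bins)
    total = sum(count for _, _, count, _, _ in table)
    return sum(count / total * abs(conf - acc) for _, _, count, conf, acc in table)


# Hypothetical history: 90%-confidence calls right 9 times in 10,
# 50%-confidence calls right 5 times in 10.
well_calibrated = ([(0.9, True)] * 9 + [(0.9, False)]
                   + [(0.5, True)] * 5 + [(0.5, False)] * 5)
# Hypothetical history: always 90% confident, never right.
overconfident = [(0.9, False)] * 10

print(f"well calibrated gap: {expected_calibration_error(well_calibrated):.2f}")
print(f"overconfident gap:   {expected_calibration_error(overconfident):.2f}")
```

A gap near zero means stated confidence matches observed accuracy; a large gap is a signal that recruiters should not lean on the confidence numbers yet.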

How do bias detection alerts increase system credibility?

Proactive bias detection represents perhaps the most powerful trust-building feature in transparent AI systems. Rather than leaving bias detection to post-hoc audits, advanced systems monitor their own decision-making patterns in real time and alert recruiters to potential concerns. These alerts typically sit alongside bias reduction mechanisms: in one described implementation, candidate profiles were anonymized in the initial round of screening to avoid biases based on gender, ethnicity, or age, so that the AI algorithms focus on objective criteria such as qualifications and skills.

These alerts might flag situations where the system's recommendations disproportionately favor certain demographic groups, schools, or background types. For instance, if an AI system consistently ranks candidates from elite universities higher than those with equivalent qualifications from lesser-known institutions, bias detection algorithms can identify this pattern and alert recruiters to review the weighting criteria.
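The article does not specify how such alerts are computed. One common starting point, used here as an assumption, is to compare selection rates between groups and flag any group whose rate falls below four-fifths of the highest rate (the "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures). The group labels, sample data, and helper names below are hypothetical, and a flag is a prompt for human review, not proof of bias.

```python
from collections import Counter


def selection_rates(outcomes):
    """outcomes: iterable of (group, advanced) pairs, where advanced is a bool."""
    totals, advanced = Counter(), Counter()
    for group, was_advanced in outcomes:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}


def impact_alerts(outcomes, threshold=0.8):
    """Return {group: impact_ratio} for groups below `threshold` of the best rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0.0)
    if best == 0.0:
        return {}  # nobody advanced, so there is nothing to compare
    return {group: rate / best for group, rate in rates.items()
            if rate / best < threshold}


# Hypothetical screening outcomes for equally qualified candidates.
outcomes = ([("elite_university", True)] * 6 + [("elite_university", False)] * 4
            + [("other_institution", True)] * 3 + [("other_institution", False)] * 7)

for group, ratio in impact_alerts(outcomes).items():
    print(f"review weighting: {group} advances at {ratio:.0%} of the top group's rate")
```

In this sample, candidates from other institutions advance at half the rate of elite-university candidates, so the check raises an alert for recruiters to review the weighting criteria.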

The credibility benefit comes from the system's apparent commitment to fairness and self-reflection. The "black-box" nature makes it difficult to understand how the systems reach hiring decisions, but transparent systems that actively monitor and report on their own potential biases demonstrate accountability. This proactive approach builds trust because it shows the technology is designed to support fair hiring practices rather than perpetuate historical inequities.

Furthermore, bias detection alerts enable continuous improvement. When the system identifies potential bias patterns, recruiters and AI developers can collaborate to adjust algorithms, reweight factors, or expand training data to address the issues. This iterative improvement process reinforces the perception that the AI system is a learning partner committed to fair and effective hiring.

What measurable improvements do transparent scoring systems deliver?

The theoretical benefits of transparency translate into concrete, measurable improvements across multiple dimensions of recruiting effectiveness. Organizations implementing transparent AI systems report significant gains in efficiency, quality, and recruiter satisfaction.

How much faster do recruiters make decisions with transparent AI explanations?

Organizations using AI-enhanced recruitment tools report substantial time savings, with transparent systems delivering even greater efficiency gains than black box alternatives. One case-study roundup reports that Eightfold's AI recruitment automation shortened both time to hire and average hiring cycles, citing figures of 40% and 30% respectively [3]. The transparency component proves crucial because recruiters can quickly understand and act on AI recommendations rather than spending time second-guessing or manually verifying algorithmic decisions.

Research attributed to Harvard Business Review found that companies that use AI in their hiring process are 46% more likely to achieve successful hires, with transparent systems showing even higher success rates [4]. The speed improvement comes from reduced cognitive load: when recruiters understand how AI reaches its conclusions, they can move confidently through evaluations without extensive manual verification.

"43% of recruiters say saving time is the main reason they use AI in hiring" [5], but transparency amplifies these time savings. Rather than spending hours trying to understand why a candidate received a particular score, recruiters with access to clear explanations can make informed decisions in minutes. This efficiency gain is particularly pronounced for high-volume recruiting, where the best resume screening software can process hundreds of candidates while providing recruiters with clear rationales for each decision.

Even organizations exploring free resume screening software options find that transparency features significantly impact adoption success. When budget-conscious teams can understand and trust their tools—regardless of cost—they're more likely to fully leverage the technology's capabilities. The time savings extend beyond individual candidate evaluations to include reduced training time for new recruiters and fewer escalations to senior team members.

What impact does scoring transparency have on hiring quality and retention?

Quality improvements represent perhaps the most significant long-term benefit of transparent AI systems. In one documented case where an organization implemented data-backed hiring processes with clear evaluation criteria, the quality of hire improved from 38% to 75%, and turnover among project managers declined. Transparency enables this improvement by allowing recruiters to understand which factors truly predict success and adjust their evaluation accordingly.

Transparent systems enable continuous quality improvement through feedback loops. When recruiters can see which candidate attributes the AI weighted heavily in successful hires, they can refine their manual evaluation processes to focus on the most predictive factors. This alignment between AI recommendations and human judgment leads to more consistent, higher-quality hiring decisions across the organization.

The retention benefits stem from better job-candidate fit. In one reported case, the quality of hires improved because of the platform's prediction of long-term potential, an effect that depends on the AI system clearly identifying and explaining the factors that correlate with long-term success. Recruiters working with transparent systems can make more informed trade-offs, understanding when a candidate's weaknesses in one area might be offset by exceptional strength in another.

Additionally, transparent AI helps organizations identify and correct systematic quality issues. If data reveals that certain evaluation criteria don't actually predict job success, transparent systems make these patterns visible, enabling organizations to refine their hiring criteria for better long-term outcomes.

How do transparent systems reduce recruiter burnout and improve job satisfaction?

Recruiter burnout often stems from the cognitive dissonance of working with systems they don't understand or trust. 53% of recruiters reported heightened job stress in 2023, much of which can be attributed to increasing pressure to use AI tools while lacking confidence in their reliability. Transparent systems address this stress by giving recruiters control and understanding over their technological tools.

Trust starts with attaining literacy, which includes "actually using the AI" and understanding how it works. When recruiters can see and understand AI decision-making processes, they regain a sense of professional agency. Rather than feeling like they're fighting against an opaque algorithm, they can leverage AI as an intelligent assistant that augments their expertise.

The satisfaction improvement comes from several sources. First, transparent AI reduces the time spent on repetitive, low-value tasks while preserving recruiter involvement in complex, judgment-intensive decisions. AI won't replace recruiters—it will empower them by handling routine screening while enabling human expertise to focus on relationship-building, complex assessments, and strategic decision-making.

Second, transparent systems provide recruiters with continuous learning opportunities. When they can see which factors predict hiring success and understand how AI algorithms identify these patterns, recruiters develop their own expertise and become more effective at their jobs. This professional development aspect transforms AI from a threatening replacement into a valuable learning tool.

Finally, transparent AI systems reduce the ethical stress associated with making hiring decisions based on unexplained algorithmic recommendations. When recruiters can see that AI systems are making fair, logical decisions based on job-relevant criteria, they feel more confident about the ethical implications of their hiring processes.

Practical Implementation: Advanced Resume Screening Solutions

Organizations seeking transparent AI capabilities are increasingly turning to advanced platforms that combine speed with explainability. Modern solutions like TheConsultNow.com exemplify this approach, delivering transformative results: "We cut manual screening by 99%" while maintaining complete transparency in decision-making processes [15]. Their comprehensive platform demonstrates how organizations can "Screen Candidates 10x Faster" without sacrificing recruiter understanding or control.

The platform's AI-Powered Resume Screening with intelligent candidate matching uses advanced scoring algorithms that provide detailed score breakdowns across all criteria with transparent explanations. This addresses the core challenge identified earlier—recruiters can see exactly why candidates receive specific scores and how different factors contribute to overall rankings. The Skills Gap Analysis feature instantly identifies which candidates have required skills and which skills are missing, enabling recruiters to make informed decisions about potential training needs or role modifications.

What sets advanced resume screening software apart is the combination of efficiency and transparency. Features like Bulk Resume Upload processing hundreds of resumes simultaneously, coupled with a Recruiter Agent AI assistant for job descriptions, candidate insights, and recruitment guidance, demonstrate how technology can augment rather than replace human judgment. The Interactive Dashboard provides comprehensive analytics and insights for data-driven decisions, while the Central Resume Database offers an organized candidate repository with powerful search capabilities.

For organizations evaluating different options, the AI Candidate Insights feature that provides strengths, weaknesses, and hiring recommendations for each candidate represents the type of transparency that builds recruiter confidence. Additionally, CSV Data Export capabilities ensure that organizations can maintain their own analytics and audit trails, supporting both transparency and compliance requirements.

How should organizations evaluate and implement transparent scoring solutions?

Successfully implementing transparent AI requires a strategic approach that balances technological capabilities with organizational needs and regulatory requirements. The evaluation and implementation process should prioritize transparency features while ensuring practical effectiveness.

What transparency features should be non-negotiable in vendor evaluation?

Organizations evaluating AI hiring tools should establish clear transparency requirements before beginning vendor discussions. The first non-negotiable feature is factor-level explainability: systems must be able to show exactly which candidate attributes influenced scoring decisions and how those factors were weighted. As one AI vendor explains its design philosophy, "Given that hiring decisions can have enormous implications for people's lives, we are not interested in building technology that adds ambiguity as to why a given applicant was selected or rejected by the system."

Confidence scoring represents the second critical requirement. Vendors should demonstrate how their systems assess and communicate uncertainty in their recommendations. How does the vendor support the AI transparency essential to explainability? This question should drive vendor conversations, with organizations requiring concrete examples of how the system presents confidence levels to users.

Bias detection and reporting capabilities form the third non-negotiable feature. Systems should actively monitor their own decision patterns for potential bias and provide clear reports on demographic impact analysis. High-impact systems used for employment will be subject to forthcoming requirements around privacy, transparency and fairness as regulations continue evolving, making proactive bias detection essential for compliance.

Algorithm documentation and audit capabilities represent the fourth requirement. Organizations need access to information about training data, model architecture, and performance metrics. How often do the vendor's data scientists monitor the system for signs it has drifted away from intended outcomes? Vendors should provide clear documentation of their monitoring and maintenance processes.

How can teams measure the impact of transparency on recruiter confidence?

Measuring transparency impact requires both quantitative metrics and qualitative feedback mechanisms. Organizations should establish baseline measurements before AI implementation, including recruiter confidence surveys, decision-making speed metrics, and hiring quality indicators. One reported example, a coaching program with £105,000 invested and a 125% return on investment (ROI), illustrates the type of measurable outcome organizations should track.

Recruiter confidence can be measured through regular surveys that assess comfort levels with AI recommendations, trust in system fairness, and perceived understanding of AI decision-making. Organizations should track changes in these metrics over time, correlating improvements with specific transparency features or training initiatives.

Decision-making efficiency metrics include time-to-decision for individual candidates, number of candidates reviewed per day, and frequency of manual overrides of AI recommendations. AI screening tools save recruiters hours by scanning and ranking resumes in minutes, and transparent systems should show even greater efficiency gains as recruiter confidence increases.
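Two of these metrics, time-to-decision and override frequency, are straightforward to compute from a decision log. The sketch below assumes a hypothetical log format in which each entry records how long the recruiter took and whether the final decision matched the AI recommendation; the field names and sample values are illustrative only.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Decision:
    minutes_to_decide: float
    ai_recommendation: str   # e.g. "advance" or "reject"
    recruiter_decision: str  # what the recruiter actually did


def efficiency_metrics(decisions):
    """Summarize decision speed and how often recruiters overrode the AI."""
    if not decisions:
        raise ValueError("decisions must not be empty")
    overrides = sum(1 for d in decisions
                    if d.ai_recommendation != d.recruiter_decision)
    return {
        "candidates_reviewed": len(decisions),
        "median_minutes_to_decision": median(d.minutes_to_decide for d in decisions),
        "override_rate": overrides / len(decisions),
    }


# Hypothetical one-day log for a single recruiter.
log = [
    Decision(4, "advance", "advance"),
    Decision(6, "reject", "reject"),
    Decision(10, "reject", "advance"),  # recruiter overrode the AI
    Decision(20, "advance", "advance"),
]
print(efficiency_metrics(log))
```

Tracking the same summary before and after a transparency feature is introduced gives a baseline comparison. A falling override rate alongside stable hiring quality would suggest growing, and warranted, recruiter confidence.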

Quality metrics should include hiring manager satisfaction scores, new hire performance ratings, and retention rates. One study's results reveal that AI implementation positively affects candidate experience and the quality of hires, with candidate experience as a significant mediator in these relationships. This suggests that transparency benefits extend beyond recruiter confidence to actual hiring outcomes.

Organizations should also track adoption metrics such as system usage rates, feature utilization, and recruiter engagement with explanation tools. High transparency should correlate with increased voluntary system usage and deeper engagement with AI-provided insights.

What change management strategies ensure successful transparent AI adoption?

Successful transparent AI adoption requires comprehensive change management that addresses both technical and cultural dimensions. "Hands-on training is important. Have discussions. Seek out resources." Organizations must invest in extensive training that goes beyond basic system operation to include understanding AI concepts, interpreting explanations, and integrating AI insights with human judgment.

The change management process should begin with leadership buy-in and clear communication about transparency goals. "You have to constantly think, 'Are we doing the right thing?' You have to ask questions about ethics and values." Acting on that advice requires leadership to model transparent, ethical decision-making around AI adoption. Leaders should openly discuss why transparency matters and how it aligns with organizational values.

Training programs should be multi-modal, including hands-on system practice, case study analysis, and peer learning sessions. Recruiters and managers suggested that AI decisions need to match the decisions humans would hypothetically make, so training should include exercises where recruiters compare their manual evaluations with AI recommendations and discuss discrepancies. This builds understanding of both AI capabilities and limitations.

Organizations should implement pilot programs with high-engagement recruiters who can serve as internal champions. These early adopters can provide feedback on transparency features, identify training gaps, and help refine implementation processes before broader rollout. The observation that trust is built every single day, in every single interaction, by the people who use these tools underscores the importance of positive early experiences.

Finally, change management should include continuous feedback mechanisms and iterative improvement processes. Regular check-ins with recruiters about their experience with transparency features, challenges they're encountering, and suggestions for improvement ensure that the AI implementation evolves to meet user needs. Regular audits provide an opportunity to review data inputs, correct bias, and align AI systems with organizational hiring and diversity objectives as part of an ongoing improvement process.

Conclusion: The Competitive Advantage of Transparent AI

The evidence is clear: transparent AI scoring systems don't just build recruiter confidence—they deliver measurable improvements in hiring speed, quality, and organizational effectiveness. While 79% of organizations now use AI in recruitment, the winners will be those that prioritize transparency over black box efficiency.

Key transparency mechanisms that drive results include: factor-level explanations that show precisely how candidate attributes influence scores, confidence indicators that help recruiters calibrate their trust appropriately, and proactive bias detection that builds credibility through accountability. These features transform AI from a mysterious algorithm into a trusted partner that augments human expertise.

Expected benefits, based on the cases cited above, include: shorter time-to-hire (the Eightfold example cites figures of 40% for time to hire and 30% for hiring cycles), a 46% higher likelihood of successful hires, quality of hire rising from 38% to 75% in one documented case, and decreases in recruiter burnout and turnover. Organizations implementing transparent AI systems see ROI ranging from 2.5x to 19.6x depending on company size, with larger organizations achieving even greater returns.

Implementation success requires: establishing transparency as a non-negotiable vendor requirement, measuring impact through both quantitative metrics and qualitative feedback, and investing in comprehensive change management that builds AI literacy across recruiting teams.

The future belongs to organizations that recognize transparency not as a constraint on AI capability, but as the foundation for sustainable AI adoption. As regulatory requirements continue expanding and candidate expectations for fairness increase, transparent AI systems provide both competitive advantage and risk mitigation.

The question isn't whether to adopt AI in recruiting—it's whether to choose systems that build trust through transparency or perpetuate uncertainty through opacity. Organizations that prioritize transparent scoring systems today will have the recruiter confidence, operational efficiency, and regulatory compliance needed to thrive in tomorrow's talent market.

References

News and Industry Sources:

[1] IBM Think - What Is Black Box AI and How Does It Work? (June 2025)

https://www.ibm.com/think/topics/black-box-ai

Context: Comprehensive analysis of black box AI challenges and explainability requirements

[2] World Economic Forum - How is AI is being used in the hiring process? (2022)

https://www.weforum.org/stories/2022/12/ai-hiring-tackle-algorithms-employment-job/

Context: Global perspective on AI hiring challenges and candidate experience

[3] Leanware - Practical AI Case Studies with ROI: Real-World Insights (May 2025)

https://www.leanware.co/insights/ai-use-cases-with-roi

Context: Real-world ROI data and implementation results from Eightfold and other platforms

[4] Recruiterflow Blog - AI Screening: A Comprehensive Guide for Recruiters (January 2025)

https://recruiterflow.com/blog/ai-screening/

Context: Harvard Business Review research on AI hiring success rates and recruiter insights

[5] SHRM - How HR Can Build Trust in AI at Work (April 2025)

https://www.shrm.org/topics-tools/news/technology/how-hr-can-build-trust-in-ai-at-work

Context: Recruiter sentiment data and trust-building strategies for AI adoption

[6] SHRM - Transparency Essential When Using AI for Hiring (April 2024)

https://www.shrm.org/topics-tools/news/technology/transparency-essential-using-ai-hiring

Context: Regulatory requirements and best practices for AI transparency in hiring

[7] SHRM - Addressing Artificial Intelligence-Based Hiring Concerns (January 2024)

https://www.shrm.org/topics-tools/news/hr-magazine/addressing-artificial-intelligence-based-hiring-concerns

Context: Industry expert insights on black box challenges and vendor transparency

[8] HR Executive - A global outlook on 13 AI laws affecting hiring and recruitment (June 2024)

https://hrexecutive.com/a-global-outlook-on-13-ai-laws-affecting-hiring-and-recruitment/

Context: Comprehensive overview of emerging AI regulations and compliance requirements

[9] TechTarget - Top AI recruiting tools and software of 2025 (2025)

https://www.techtarget.com/searchhrsoftware/tip/Top-AI-recruiting-tools-and-software-of-2022

Context: Technical evaluation criteria for AI recruiting platforms and transparency features

[10] Credly Learning - What is AI Transparency & Why is it Critical to Your Recruiting Strategy? (April 2024)

https://learn.credly.com/blog/what-is-ai-transparency-why-is-it-critical-to-your-recruiting-strategy

Context: Industry analysis of AI transparency requirements and implementation strategies

[11] RecruitingDaily - Transparency in AI-Driven Hiring: a Must Or a Choice? (March 2024)

https://recruitingdaily.com/transparency-in-ai-driven-hiring-a-must-or-a-choice/

Context: Expert opinions on transparency importance and practical implementation examples

[12] Hunton Andrews Kurth - The Evolving Landscape of AI Employment Laws (2025)

https://www.hunton.com/insights/publications/the-evolving-landscape-of-ai-employment-laws-what-employers-should-know-in-2025

Context: Legal analysis of 400+ AI-related bills and state-level regulatory requirements

[13] Littler - What Does the 2025 Artificial Intelligence Legislative and Regulatory Landscape Look Like (2025)

https://www.littler.com/news-analysis/asap/what-does-2025-artificial-intelligence-legislative-and-regulatory-landscape-look

Context: Legal framework analysis for AI compliance and risk management

[14] Cimplifi - The Updated State of AI Regulations for 2025 (April 2025)

https://www.cimplifi.com/resources/the-updated-state-of-ai-regulations-for-2025/

Context: Global regulatory landscape updates including EU AI Act and AIDA developments

[15] TheConsultNow.com - AI Resume Screening Platform (2025)

https://theconsultnow.com

Context: Advanced AI resume screening solution with transparent scoring and comprehensive analytics

Academic and Research Sources:

[16] Springer AI and Ethics - Responsible artificial intelligence in human resources management (2023)

https://link.springer.com/article/10.1007/s43681-023-00325-1

Context: Systematic review of empirical literature on responsible AI principles in HRM

[17] Nature Humanities and Social Sciences Communications - Ethics and discrimination in artificial intelligence-enabled recruitment practices (2023)

https://www.nature.com/articles/s41599-023-02079-x

Context: Comprehensive analysis of algorithmic bias and technical mitigation strategies

[18] PMC - Is AI recruiting (un)ethical? A human rights perspective on the use of AI for hiring (2022)

https://pmc.ncbi.nlm.nih.gov/articles/PMC9309597/

Context: Human rights analysis of AI recruiting with transparency and accountability requirements

[19] Frontiers in Psychology - Developing trustworthy artificial intelligence: insights from research on interpersonal, human-automation, and human-AI trust (April 2024)

https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2024.1382693/full

Context: Comprehensive trust research including transparency and explainability impact studies

[20] ScienceDirect - Recruiter's perception of artificial intelligence (AI)-based tools in recruitment (2023)

https://www.sciencedirect.com/science/article/pii/S2451958823000313

Context: Empirical study on recruiter acceptance factors and behavioral intention toward AI tools

[21] Wiley Online Library - How do employees form initial trust in artificial intelligence: hard to explain but leaders help (2024)

https://onlinelibrary.wiley.com/doi/10.1111/1744-7941.12402

Context: Experimental research on trust formation in organizational AI systems

[22] PMC - Collaboration among recruiters and artificial intelligence: removing human prejudices in employment (2022)

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9516509/

Context: Human-AI collaboration research including cognitive workload and shared control analysis

[23] MDPI Applied Sciences - From Recruitment to Retention: AI Tools for Human Resource Decision-Making (2024)

https://www.mdpi.com/2076-3417/14/24/11750

Context: Comprehensive analysis of AI accuracy metrics and performance evaluation in recruitment

[24] MDPI Information - Barriers and Enablers of AI Adoption in Human Resource Management (2025)

https://www.mdpi.com/2078-2489/16/1/51

Context: Critical analysis of organizational and technological factors affecting AI adoption success

[25] California Management Review - Artificial intelligence in human resources management: challenges and a path forward (2019)

https://journals.sagepub.com/doi/10.1177/0008125619867910

Context: Foundational research on AI implementation challenges and practical response strategies
